AI (Artificial Intelligence) is everywhere. Second-guessing our playlists, what we want to buy, where we want to go and how to get there. Often unseen. Always present. AI can do this because it has access to Big Data. Powerful machine learning can make just about anything a lot smarter. How much data is big data? Each day, on social media alone:

- Up to a billion images uploaded to Facebook

- 100+ years of video uploaded to YouTube

- Half a billion tweets on Twitter

Not to mention Pinterest, Tumblr, Snapchat, LinkedIn and all the rest. We all know these social media giants understand our lives better than our own mothers, but we’re engineers, so let’s drill down and take a look at five ground-breaking uses of AI in the last five years.

What happens to all this data?

Well, let’s look at Facebook – all those uploaded selfies and snapshots of what we ate, where we went and what we bought.

- Each image goes through about a dozen neural networks to extract context information: faces, text, adult content, etc.

- Images are tagged and classified; that information goes onto a vast data pile and is used to train more neural networks and other machine learning models.

- As data is added, the machine learning systems get better and better at dealing with the data and drawing information and conclusions from it.

That’s how AI works: looking at examples and extracting commonalities to generalize information and put it to work. Although we’re still a long way from Artificial General Intelligence, machines have become very good at some tasks. Here are five uses of AI that have become prominent in the last five years.
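To make “looking at examples and generalizing” concrete, here is a minimal sketch of one of the simplest learning methods, a k-nearest-neighbour classifier. It is purely illustrative: the 2-D points and the “cat”/“dog” labels are invented, and the systems described above use deep neural networks on far richer features.

```python
import math
from collections import Counter


def knn_predict(examples, query, k=3):
    """Label `query` by majority vote among its k closest labelled examples.

    `examples` is a list of ((x, y), label) pairs; the data below is invented.
    """
    # Sort the stored examples by Euclidean distance to the query point
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical training data: two loose clusters of 2-D feature vectors
examples = [
    ((1.0, 1.2), "cat"), ((0.8, 0.9), "cat"), ((1.1, 0.7), "cat"),
    ((4.0, 4.2), "dog"), ((4.3, 3.9), "dog"), ((3.8, 4.1), "dog"),
]

print(knn_predict(examples, (1.0, 1.0)))  # cat
print(knn_predict(examples, (4.1, 4.0)))  # dog
```

The program never receives a rule for telling cats from dogs; it generalizes from the stored examples, and adding more (and more varied) examples is what makes it better.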

1. Human image generation: seeing is believing

Maybe you’ve seen the AI-generated video of Barack Obama voicing his opinions on Black Panther and Donald Trump, or the face of Nicolas Cage on the bodies of other actors.

Generative adversarial networks (GANs) are the key. GANs can be trained to generate artificial samples that are indistinguishable from originals. Their output can fool even a careful observer, and they can also transfer different styles and poses onto a target image. Every face shown below has been artificially generated. The system learns how to do this just by looking at many, many examples of faces. Seeing is no longer believing.
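For those curious about the “adversarial” part: in the original GAN formulation, two networks are trained against each other. A generator G turns random noise z into a fake sample, while a discriminator D outputs the probability that its input is a real example x rather than a fake. D is trained to maximize, and G to minimize, the value function below (shown in its standard textbook form; practical systems often use variations of it):

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

If training succeeds, D ends up doing no better than guessing (D = 1/2 everywhere), which is exactly the point at which generated faces can no longer be told apart from real ones.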

2. Word embedding: beware fake news!

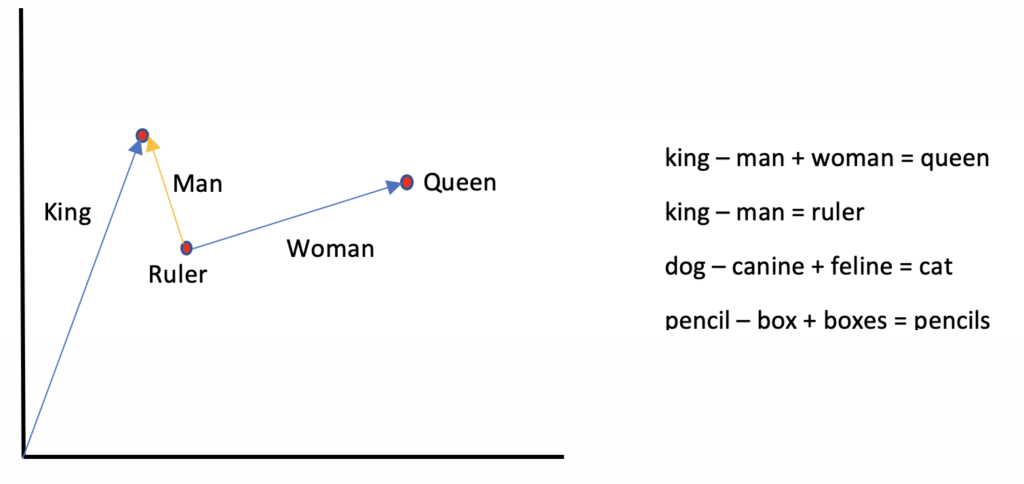

Language and mathematics are separate subjects no more. Words are converted to a vector representation that preserves their contextual meaning. You can add and subtract vectors, and that often reveals associations.

The classic example is King – Man + Woman = Queen

You can see the results of some more vector arithmetic on the same words in Figure 2.
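The arithmetic itself is straightforward. The sketch below uses hand-made three-dimensional vectors (invented for illustration; real embeddings such as word2vec or GloVe are learned from huge text corpora and have hundreds of dimensions) and finds the word whose vector points in the most similar direction, by cosine similarity, to King - Man + Woman. As is common practice, the three input words are excluded from the candidates.

```python
import math

# Toy 3-D "embeddings" invented for illustration.
# Hypothetical dimensions: [royalty, maleness, femaleness]
vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}


def cosine(u, v):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(analogy("king", "man", "woman"))  # queen
```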

Natural language processing has made tremendous advances by using word embeddings followed by task-specific post-processing. Early in 2019, a team at OpenAI announced a text prediction model (GPT-2) so advanced that it can generate fake news articles that are hard to tell apart from real ones. Because the model was so fluent at generating text, OpenAI considered it too risky to release to the public in full and is releasing it in stages, starting with smaller, less capable versions. This really highlights the power of AI: extracting structure and associations from many example sentences, to the point where the very nature and flow of language can be modeled.
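GPT-2 itself is a large transformer neural network, far beyond a blog snippet. Its core task, though, is predicting the next word from what came before, and that can be sketched with a much simpler technique: a bigram model that just counts which word follows which in a corpus. The tiny corpus below is invented for illustration.

```python
from collections import Counter, defaultdict

# A tiny invented corpus; GPT-2 was trained on millions of web pages.
corpus = (
    "the king spoke to the people . "
    "the queen spoke to the court . "
    "the king ruled the land ."
).split()

# Count how often each word follows each other word (a bigram model)
follow_counts = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    follow_counts[current][nxt] += 1


def predict_next(word):
    """Return the most frequent follower of `word` in the corpus, or None."""
    followers = follow_counts.get(word)
    return followers.most_common(1)[0][0] if followers else None


def generate(start, length=6):
    """Greedily extend `start` one predicted word at a time."""
    words = [start]
    for _ in range(length - 1):
        nxt = predict_next(words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


print(predict_next("the"))  # king
print(generate("the"))
```

Greedy generation from such a model quickly falls into repetitive loops, because it only ever looks one word back. Models like GPT-2 condition on long stretches of preceding text, which is a large part of why their output reads so fluently.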

3. Sotheby’s/Christie’s AI art auction: AI pays!

“Edmond de Belamy” is an AI-generated portrait, printed on canvas, framed in gilded wood and auctioned by Christie’s for $432,500. This work belongs to a series of generative images called La Famille de Belamy, by the Paris-based arts collective Obvious.

To create these portraits, Obvious trained a GAN (the same type of network described in the first example) using code written and shared online by Robbie Barrat, a recent high school graduate. Although AI art seems to have taken off considerably in recent years, the term “AI Art” is a little misleading. An artist still plays a significant part in the process by assembling the source images used to train the system. However, given enough examples, AI can extract the features and styles of the source images and use them to generate something genuinely new. The amount of “imagination” the GAN applies can be controlled, producing either something quite similar to a learned example or something more abstract.
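Exactly how that “imagination” dial is set varies between systems, and the details for these portraits aren’t covered here. One widely used mechanism is the so-called truncation trick: the random latent vector fed to the generator is pulled towards the average latent by a factor ψ (psi); StyleGAN, for instance, applies this in its intermediate latent space. Below is a minimal sketch, assuming a standard normal latent space (average = zero vector); the function name is hypothetical and the generator that would consume these vectors is not shown.

```python
import random


def truncated_latent(dim, psi, rng, mean=None):
    """Sample a latent vector and pull it towards the average by factor psi.

    psi = 0 gives the average latent (the most 'typical' output);
    psi = 1 leaves the random sample untouched (more varied, more abstract).
    Assumes a standard normal latent space, whose average is the zero vector.
    """
    if mean is None:
        mean = [0.0] * dim
    z = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    return [m + psi * (x - m) for m, x in zip(mean, z)]


rng = random.Random(42)
conservative = truncated_latent(512, psi=0.5, rng=rng)  # more typical images
adventurous = truncated_latent(512, psi=1.0, rng=rng)   # more unusual images
# A trained generator (not shown) would turn each vector into an image.
```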

4. Defeating humans at games: the rise of the machines

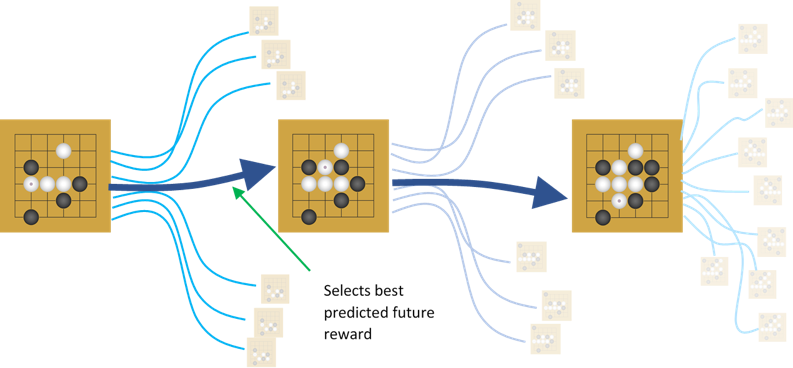

In 1997, IBM’s Deep Blue defeated world champion Garry Kasparov at chess using brute-force search. Following this, an astrophysicist from Princeton remarked that it would take at least a hundred years before computers and AI could beat humans at the ancient Chinese game of Go. Within two decades, DeepMind’s AlphaGo beat world Go champion Lee Sedol.

Predictions in any part of engineering are based on current knowledge, so innovation is often the instrument of their downfall. After all, in 1977, a tech company chief executive famously said, “There is no reason anyone would want a computer in their home”.

AlphaGo achieved its win by first learning from a large database of human-played games and then sharpening its play in games against versions of itself. DeepMind later developed AlphaGo Zero, a version of the same AI that could match, and then surpass, those results with “zero” human involvement (hence the name). It taught itself to play Go by playing millions of games against itself. AlphaGo Zero was later generalized to become AlphaZero, which mastered not only Go but also other games, including chess and shogi.
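AlphaGo Zero combined deep neural networks with Monte Carlo tree search, which is far too much for a blog post. The core idea of self-play, however, fits in a few lines. In the sketch below (simple tabular Q-learning, not DeepMind’s method), a single learner plays both sides of a toy game of Nim: players alternately remove one to three sticks, and whoever takes the last stick wins. Nobody tells it the strategy; it only ever sees the outcomes of games against itself. The game rules, learning rate and exploration rate are illustrative choices.

```python
import random

MAX_TAKE = 3  # a player may remove 1, 2 or 3 sticks per turn (toy rules)


def legal_moves(sticks):
    """Moves available when `sticks` sticks remain."""
    return list(range(1, min(MAX_TAKE, sticks) + 1))


def train_self_play(max_sticks=12, episodes=20000, alpha=0.5, epsilon=0.3, seed=0):
    """Tabular Q-learning in which one agent plays both sides of the game."""
    rng = random.Random(seed)
    # q[(sticks, take)] estimates the outcome (+1 win, -1 loss) for the mover
    q = {(s, a): 0.0 for s in range(1, max_sticks + 1) for a in legal_moves(s)}

    for _ in range(episodes):
        sticks = rng.randint(1, max_sticks)
        while sticks > 0:
            moves = legal_moves(sticks)
            if rng.random() < epsilon:
                take = rng.choice(moves)  # explore a random move
            else:
                take = max(moves, key=lambda a: q[(sticks, a)])  # exploit
            remaining = sticks - take
            if remaining == 0:
                target = 1.0  # taking the last stick wins
            else:
                # Zero-sum game: my value is minus the opponent's best value
                target = -max(q[(remaining, a)] for a in legal_moves(remaining))
            q[(sticks, take)] += alpha * (target - q[(sticks, take)])
            sticks = remaining  # the same agent now moves for the opponent
    return q


def best_move(q, sticks):
    """Greedy move according to the learned values."""
    return max(legal_moves(sticks), key=lambda a: q[(sticks, a)])


q = train_self_play()
print(best_move(q, 7))  # 3, leaving the opponent 4 sticks
```

For this game the winning strategy is known: always leave your opponent a multiple of four sticks. The learned values recover it for every position where a win is possible, even though that rule appears nowhere in the code.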

Removing the need for human input freed the AI to develop its own strategies. These strategies have proven to be so novel that they are now being adopted by human players. AI has become a teacher! In the words of AlphaZero creator David Silver, “We’ve removed the constraint of human knowledge”.

The next goal for AI game playing is real-time video games such as StarCraft II or Dota 2, where all contestants act simultaneously and no contestant can see everything the others are doing. Earlier this year, DeepMind’s AlphaStar won ten out of eleven StarCraft II games against professional players. Again, it achieved this by coming up with novel (and sometimes seemingly nonsensical) strategies.

5. AI in healthcare: what seems to be the problem?

Clinical diagnosis plays to the greatest strength of AI: big data yet again. Combining large datasets of medical imaging, patient medical records and genetics can improve diagnostic outcomes. Personal health virtual assistants are not far away, with a number of AI chatbots being developed around the world.

Great success has been achieved by combining humans and AI in this area. In one approach, AI is used to pre-check scans for cancer, filtering cases before they reach a human expert. The AI is deliberately biased towards false positives, which is far preferable to misdiagnosing a scan as clear. The specialist then only needs to look through dozens of ‘potentially positive’ scans rather than thousands. This has yielded a higher identification rate than either the AI or the human achieves working alone. The sheer data-processing power of the AI is combined with human expertise for a faster and more accurate diagnosis.
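Biasing a classifier towards false positives usually comes down to where its decision threshold is set. As a sketch, assuming a model that outputs a “suspicion” score per scan, we can pick the highest threshold that still flags every known cancer case in a labelled set (100% recall). The function name, scores and labels below are invented for illustration.

```python
import math


def triage_threshold(scores, labels, min_recall=1.0):
    """Return the highest threshold that still flags at least `min_recall`
    of the truly positive cases (label 1 = cancer, 0 = clear).

    Every scan scoring at or above the threshold goes to the specialist.
    """
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    if not positives:
        raise ValueError("need at least one positive example")
    # How many positives must be flagged to meet the recall target
    needed = max(1, math.ceil(min_recall * len(positives)))
    return positives[needed - 1]


# Hypothetical model scores for ten scans, with the true diagnosis for each
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.80, 0.90, 0.95]
labels = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1]

threshold = triage_threshold(scores, labels)
flagged = [s for s in scores if s >= threshold]
print(threshold, len(flagged))  # 0.35 7: every cancer caught, 3 clear scans filtered out
```

In practice the threshold would be chosen on a separate validation set and checked on unseen scans; tuning it on the same data you report results on gives an over-optimistic picture.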

If you’re worried about having an AI analyze your symptoms, one study might put your fears to rest. Scientists trained pigeons to spot cancer in images of biopsied tissue. By combining the answers of the flock, the study found that the pigeons performed as well as trained human experts. This only works because the pigeons ‘vote’ on the answer and statistics are used to improve the accuracy of the combined answer. With this “ask-the-audience” approach, you may get several different answers, but if a significant majority agree, they are probably right.
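The statistics behind the “ask-the-audience” approach can be worked out exactly if we assume each voter is independently right with the same probability p on a yes/no question (a strong simplification, and the setting of Condorcet’s jury theorem). The sketch below computes the chance that a strict majority gets it right.

```python
from math import comb


def majority_accuracy(n_voters, p_correct):
    """Probability that a strict majority of n independent voters is right,
    when each is right with probability p_correct (ties count as wrong)."""
    needed = n_voters // 2 + 1
    return sum(
        comb(n_voters, k) * p_correct**k * (1 - p_correct) ** (n_voters - k)
        for k in range(needed, n_voters + 1)
    )


for n in (1, 3, 25, 101):
    print(n, round(majority_accuracy(n, 0.7), 3))
```

With p = 0.7, a single voter is right 70% of the time, while three voters reach a correct majority 78.4% of the time. As the crowd grows, the majority becomes more and more reliable, provided p is above 0.5 and the errors really are independent.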

Statistics is at the very heart of AI: it is what enables information to be extracted from data.

Where is AI Going?

Although I have listed (almost arbitrarily) only five uses of AI here, there were many more to choose from, including brewing beer, creating food recipes (with some ‘interesting’ results) and composing music. Of course, this isn’t even touching on things like self-driving cars, smart cities, multiple image-processing applications, breakdown prediction, retail analysis, insurance, finance, self-writing software and other engineering fields.

The world has become obsessed with AI. When you work in this field, it is difficult not to be overwhelmed by the sheer amount of money being spent and research going on. You could spend your days reading paper after paper just to keep up with the state of the art.

This truly is Software 2.0. Instead of running complex, human-written programs to get results, machines learn from and interpret big data to create the required functions and algorithms. Although creating AI systems is quite complex, there will come a time when AI components can be obtained as ‘off the shelf’ packages, applicable to a wide variety of tasks. At Zuken, we are excited to be at the forefront of this revolution, taking our work beyond the state of the art and pushing the boundaries of this new frontier.
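Here is the Software 2.0 idea at its very smallest. Rather than hand-coding the rule y = 3x - 2, the sketch below learns it from examples by gradient descent on the mean squared error. It is a toy linear model with illustrative settings for the learning rate and step count, but the principle (parameters fitted to data instead of written by a programmer) is the same one that scales up to neural networks with millions of parameters.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Learn w and b in y ≈ w*x + b from examples by gradient descent
    on the mean squared error, instead of hand-coding the rule."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of mean((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Examples produced by a rule the program is never told: y = 3x - 2
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3 * x - 2 for x in xs]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # approximately 3.0 and -2.0
```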

But what about PCBs? This is a Zuken post and I haven’t mentioned them at all. Well, watch this space. Our goals are the same as above:

- Examples interpreted to derive solutions and associations

- Controlled creativity

- AI opening possibilities not seen before

- Combining AI with human expertise

- Using AI to get faster and more accurate results

We’re working on it!